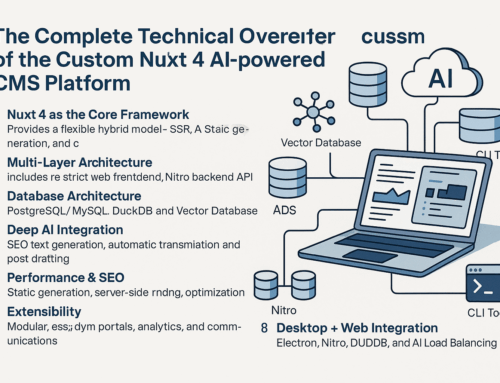

Aaasaasa CLI – Multi-Provider AI Tool

Aaasaasa CLI is a lightweight, fast, and extensible command-line interface designed for working with multiple AI providers in a unified way. It was built to combine local AI inference, cluster-based AI scaling, and cloud AI APIs into one seamless workflow.

🚀 Key Advantages

- Unified interface for Ollama, vLLM clusters, and OpenAI

- Supports multiple `endpoints` per profile with load balancing (`round_robin`, `least_conn`, `p2c`)

- Profiles system: switch between local, cluster, and cloud setups instantly

- Lightweight and fast: written in Rust

- Configurable with a simple `~/.config/aaasaasa-cli/config.json`

- Ready for extension (custom providers, optimizers, balancing algorithms)
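The configuration file lives under the user's home directory. The Rust sketch below shows how that default path could be built from `$HOME`. The helpers `config_path_in` and `config_path` are hypothetical, and it assumes a Unix-style home directory; the real CLI may resolve the location differently on other platforms.

```rust
use std::env;
use std::path::{Path, PathBuf};

/// Hypothetical helper: joins `<home>/.config/aaasaasa-cli/config.json`,
/// the default location listed above.
fn config_path_in(home: &Path) -> PathBuf {
    home.join(".config").join("aaasaasa-cli").join("config.json")
}

/// Hypothetical helper: reads $HOME from the environment and returns the
/// default config path, or None when HOME is not set.
/// Assumption: Unix-style home directory; other platforms may differ.
fn config_path() -> Option<PathBuf> {
    env::var_os("HOME").map(|home| config_path_in(Path::new(&home)))
}
```

Keeping the pure path-joining step separate from the environment lookup makes it easy to test without touching real environment variables.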

⚙️ Technologies

- Rust for speed and safety

- Tokio async runtime for concurrency

- Reqwest for HTTP requests

- Clap for CLI argument parsing

- Serde for JSON configs

📂 Example Configuration

```json
{
  "profiles": {
    "local-ollama": {
      "provider": "ollama",
      "endpoints": ["http://10.0.0.11:11434", "http://10.0.0.12:11434"],
      "balance": "least_conn",
      "model": "qwen2.5:7b-instruct-q5_K_M"
    },
    "cluster-vllm": {
      "provider": "vllm",
      "endpoints": ["http://10.0.1.21:8000", "http://10.0.1.22:8000"],
      "balance": "p2c",
      "model": "llama-3-70b-instruct"
    },
    "openai-fast": {
      "provider": "openai",
      "endpoint": "https://api.openai.com/v1/chat/completions",
      "balance": "round_robin",
      "model": "gpt-4o"
    }
  },
  "default_profile": "local-ollama"
}
```
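The example configuration names three `balance` strategies. The following Rust sketch shows one common way each could choose an endpoint: round-robin cycles through the list, least-connections picks the endpoint with the fewest in-flight requests, and power-of-two-choices (assuming that is what `p2c` means here) samples two distinct endpoints at random and keeps the less loaded one. `Balance`, `Balancer`, `pick`, `start`, and `finish` are hypothetical names, and the small SplitMix64 generator is a stand-in for a real RNG crate; this is not the CLI's actual implementation.

```rust
use std::sync::atomic::{AtomicU64, AtomicUsize, Ordering};

/// The three `balance` values used in the example config.
#[derive(Clone, Copy, Debug, PartialEq)]
enum Balance { RoundRobin, LeastConn, P2c }

impl Balance {
    /// Maps a config string to a strategy; unknown names yield None.
    fn parse(s: &str) -> Option<Balance> {
        match s {
            "round_robin" => Some(Balance::RoundRobin),
            "least_conn" => Some(Balance::LeastConn),
            "p2c" => Some(Balance::P2c),
            _ => None,
        }
    }
}

/// SplitMix64 step: turns a counter value into a pseudo-random u64.
fn splitmix64(x: u64) -> u64 {
    let mut z = x.wrapping_add(0x9E37_79B9_7F4A_7C15);
    z = (z ^ (z >> 30)).wrapping_mul(0xBF58_476D_1CE4_E5B9);
    z = (z ^ (z >> 27)).wrapping_mul(0x94D0_49BB_1331_11EB);
    z ^ (z >> 31)
}

/// Hypothetical balancer: endpoint URLs plus per-endpoint in-flight counters.
struct Balancer {
    endpoints: Vec<String>,
    in_flight: Vec<AtomicUsize>,
    cursor: AtomicUsize, // round-robin position
    seed: AtomicU64,     // counter fed into splitmix64 for p2c sampling
}

impl Balancer {
    fn new(endpoints: Vec<String>) -> Self {
        let in_flight = endpoints.iter().map(|_| AtomicUsize::new(0)).collect();
        Balancer { endpoints, in_flight, cursor: AtomicUsize::new(0), seed: AtomicU64::new(0) }
    }

    /// Returns the index of the chosen endpoint, or None if there are none.
    fn pick(&self, strategy: Balance) -> Option<usize> {
        let n = self.endpoints.len();
        if n == 0 {
            return None;
        }
        let load = |i: usize| self.in_flight[i].load(Ordering::Relaxed);
        let idx = match strategy {
            Balance::RoundRobin => self.cursor.fetch_add(1, Ordering::Relaxed) % n,
            // Ties go to the first endpoint with the minimum load.
            Balance::LeastConn => (0..n).min_by_key(|&i| load(i)).unwrap(),
            Balance::P2c => {
                let r = splitmix64(self.seed.fetch_add(1, Ordering::Relaxed));
                let a = (r % n as u64) as usize;
                // Offset in 1..n guarantees b != a when there are 2+ endpoints.
                let b = if n > 1 { (a + 1 + ((r >> 32) % (n as u64 - 1)) as usize) % n } else { a };
                if load(b) < load(a) { b } else { a }
            }
        };
        Some(idx)
    }

    /// Call when a request to endpoint `i` starts / completes.
    fn start(&self, i: usize) { self.in_flight[i].fetch_add(1, Ordering::Relaxed); }
    fn finish(&self, i: usize) { self.in_flight[i].fetch_sub(1, Ordering::Relaxed); }
}
```

Least-connections needs a full scan of all endpoints, while `p2c` only inspects two, which is why it is often preferred for larger clusters.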

💻 Usage Examples

```bash
# Start chat session with default profile
3a2a-cli chat "Hello 3a2a!"

# Use OpenAI profile
3a2a-cli -p openai-fast chat "Write me a poem."

# REPL interactive mode
3a2a-cli repl

# Show config path
3a2a-cli where
```
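In these examples, `-p openai-fast` selects a profile explicitly, while the first command uses `default_profile` from the config. The Rust sketch below captures that precedence rule, assuming an unknown profile name should be reported as an error. `Profile` and `resolve_profile` are hypothetical and keep only two of the documented profile fields.

```rust
use std::collections::HashMap;

/// Hypothetical, trimmed-down view of one profile from config.json.
#[derive(Debug, Clone, PartialEq)]
struct Profile {
    provider: String,
    model: String,
}

/// Picks the active profile: an explicit `-p <name>` wins, otherwise
/// `default_profile` is used. Unknown names become an error message.
fn resolve_profile<'a>(
    profiles: &'a HashMap<String, Profile>,
    default_profile: &str,
    cli_flag: Option<&str>,
) -> Result<(&'a str, &'a Profile), String> {
    let name = cli_flag.unwrap_or(default_profile);
    profiles
        .get_key_value(name)
        .map(|(k, v)| (k.as_str(), v))
        .ok_or_else(|| format!("unknown profile: {name}"))
}
```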

🌐 Real-World Use Cases

- Run local inference for speed-sensitive tasks

- Distribute workloads across multiple GPUs using cluster mode

- Fall back to the OpenAI API for high-accuracy tasks

- Integrate into web applications and automation scripts
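One way to read "fall back to the OpenAI API" is an ordered failover: try a local profile first and move on to `openai-fast` only if it fails. The Rust sketch below assumes that reading. `chat_with_fallback` and its `send` callback are hypothetical stand-ins for whatever actually performs a request (for example, a script invoking `3a2a-cli -p <profile> chat`).

```rust
/// Tries each profile in order using the caller-supplied `send` function.
/// Returns the first successful (profile, reply) pair, or every error
/// collected along the way if all profiles fail.
fn chat_with_fallback<F>(
    profiles: &[&str],
    prompt: &str,
    mut send: F,
) -> Result<(String, String), Vec<String>>
where
    F: FnMut(&str, &str) -> Result<String, String>,
{
    let mut errors = Vec::new();
    for &profile in profiles {
        match send(profile, prompt) {
            Ok(reply) => return Ok((profile.to_string(), reply)),
            Err(e) => errors.push(format!("{profile}: {e}")),
        }
    }
    Err(errors)
}
```

Passing the request function in as a closure keeps the failover order independent of any particular provider client.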

📈 Roadmap

- AI-based auto-balancing between endpoints

- Support for more providers (Anthropic, Mistral, etc.)

- Cluster orchestration tools for large models

- WebSocket & high-throughput pipelines

📜 License

This software is proprietary and licensed under the 3a2a™ EULA.